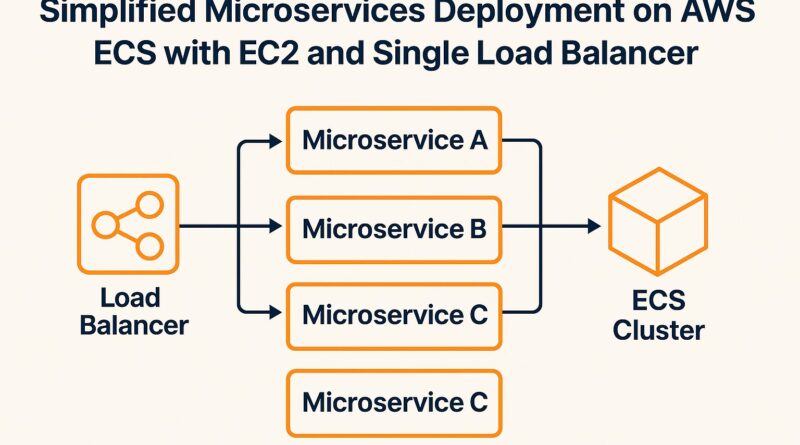

Simplified Microservices Deployment on AWS ECS

Microservices architecture is increasingly popular for building scalable and resilient applications. However, deploying microservices can be challenging, especially when managing multiple services in the cloud. AWS provides a robust set of tools, including Amazon ECS (Elastic Container Service) and EC2 (Elastic Compute Cloud), to simplify the process. In this guide, we will show you how to deploy microservices on AWS ECS with EC2 instances and a single load balancer, reducing the complexity of managing multiple microservices and streamlining your deployment.

What is AWS ECS?

Amazon Elastic Container Service (ECS) is a highly scalable container orchestration service that makes it easy to run and manage Docker containers on AWS. With ECS, you can deploy microservices and applications on a cluster of EC2 instances or on Fargate (AWS’s serverless compute engine). ECS schedules containers onto the available capacity, keeps the requested number of them running, and integrates with load balancing and auto scaling.

Using ECS, you can automate your microservices deployment and spend less time on manual infrastructure work, so you can focus on building applications.

How EC2 Instances Fit into Microservices Deployment

EC2 instances are virtual servers in the cloud. When you deploy microservices on ECS with the EC2 launch type, your containers run on EC2 instances that you provision and register with the ECS cluster. EC2 provides scalable compute capacity: adding instances lets you handle higher traffic and run more microservices side by side.

There are two primary ways to run containers in ECS:

- EC2 Launch Type: You manage the EC2 instances that run your containers.

- Fargate Launch Type: AWS manages the infrastructure for you, and you only focus on containers.

For this guide, we will use the EC2 Launch Type because it gives you full control over your EC2 instances and can be more cost-effective for steady, predictable workloads where you can keep the instances well utilized.
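As a rough illustration of what changes between the two launch types, the Python sketch below assembles the keyword arguments for boto3's `ecs.create_service`. It only builds a dictionary, so it runs without AWS credentials. The service name, task definition revision, subnet, and security group IDs are hypothetical placeholders. The main difference it shows: Fargate tasks always use the `awsvpc` network mode, so the service must supply a `networkConfiguration`, whereas an EC2-launch-type service whose task definition uses `bridge` mode does not need one.

```python
def service_request(launch_type: str, task_definition: str, desired_count: int = 2) -> dict:
    """Build keyword arguments for boto3's ecs.create_service (illustrative values)."""
    request = {
        "cluster": "microservices-cluster",  # cluster name used in Step 1
        "serviceName": "users-service",      # hypothetical service name
        "taskDefinition": task_definition,   # "family:revision"
        "desiredCount": desired_count,
        "launchType": launch_type,
    }
    if launch_type == "FARGATE":
        # Fargate tasks always use the awsvpc network mode, so the service must
        # say which subnets and security groups the task network interfaces use.
        request["networkConfiguration"] = {
            "awsvpcConfiguration": {
                "subnets": ["subnet-0123456789abcdef0"],   # hypothetical
                "securityGroups": ["sg-0123456789abcdef0"],  # hypothetical
                "assignPublicIp": "DISABLED",
            }
        }
    elif launch_type != "EC2":
        raise ValueError(f"unsupported launch type: {launch_type}")
    # With the EC2 launch type and a bridge-mode task definition, tasks run on
    # the cluster's container instances and no networkConfiguration is needed.
    return request


ec2_request = service_request("EC2", "users:1")
fargate_request = service_request("FARGATE", "users:1")
# With credentials configured: boto3.client("ecs").create_service(**ec2_request)
```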

Configuring a Single Load Balancer for Multiple Microservices

When deploying multiple microservices, a load balancer is essential for distributing traffic across your containers. It routes requests only to targets that pass health checks, which improves application performance and reliability.

In this setup, we will configure an Application Load Balancer (ALB) to handle HTTP(S) traffic for all microservices. The ALB will route traffic based on URL paths to the corresponding ECS services.
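To see how path-based routing decides where a request goes, here is a small local model of ALB listener-rule evaluation in Python. It is an approximation, not the ALB itself: it assumes two hypothetical target groups (`users-tg`, `orders-tg`) and a hypothetical 404 default action, and it uses `fnmatch.fnmatchcase` for the case-sensitive `*` and `?` wildcards that ALB path patterns support (`fnmatch` additionally treats `[...]` as a character class, which ALB does not).

```python
from fnmatch import fnmatchcase
from urllib.parse import urlsplit

# Hypothetical listener rules: (priority, path patterns, target group name).
RULES = [
    (10, ["/api/users", "/api/users/*"], "users-tg"),
    (20, ["/api/orders", "/api/orders/*"], "orders-tg"),
]
DEFAULT_ACTION = "fixed-response-404"  # hypothetical listener default action


def route(url: str) -> str:
    """Return the target group a request would be forwarded to.

    Local approximation of ALB path-pattern rules: rules are checked from the
    lowest priority number upward, patterns are case-sensitive and use the
    * and ? wildcards, and only the path (not the query string) is compared.
    """
    path = urlsplit(url).path or "/"
    for _priority, patterns, target_group in sorted(RULES, key=lambda rule: rule[0]):
        if any(fnmatchcase(path, pattern) for pattern in patterns):
            return target_group
    return DEFAULT_ACTION  # no rule matched, so the default action applies


print(route("http://alb.example.com/api/users/42?expand=1"))
```

Listing both `/api/users` and `/api/users/*` keeps the rule from also catching unrelated paths such as `/api/usersettings`, which a single `/api/users*` pattern would match.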

Benefits of Using a Single Load Balancer

- Simplified Routing: A single ALB can route traffic to different services based on their paths, such as /api/users for a user service and /api/orders for an order service.

- Cost-Effective: Managing one load balancer for all microservices reduces costs compared to using separate load balancers for each service.

- Centralized Management: With a single ALB, you can manage SSL certificates, security groups, and routing rules from one central location.
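The two routes from the Simplified Routing example can be expressed as listener rules on one shared listener. This sketch builds keyword arguments for boto3's `elbv2.create_rule`; the listener and target group ARNs are hypothetical placeholders (123456789012 is a dummy account ID), and the actual API call is left as a comment.

```python
def path_rule(listener_arn: str, priority: int, base_path: str, target_group_arn: str) -> dict:
    """Keyword arguments for boto3's elbv2.create_rule that forward base_path,
    and everything beneath it, to a single target group."""
    return {
        "ListenerArn": listener_arn,
        # Lower numbers are evaluated first; each priority must be unique on a listener.
        "Priority": priority,
        "Conditions": [
            {
                "Field": "path-pattern",
                "PathPatternConfig": {"Values": [base_path, f"{base_path}/*"]},
            }
        ],
        "Actions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }


# Hypothetical ARNs: one shared listener and one target group per service.
LISTENER_ARN = (
    "arn:aws:elasticloadbalancing:us-east-1:123456789012:"
    "listener/app/microservices-alb/0123456789abcdef/0123456789abcdef"
)
TARGET_GROUPS = {
    "/api/users": "arn:aws:elasticloadbalancing:us-east-1:123456789012:targetgroup/users-tg/0123456789abcdef",
    "/api/orders": "arn:aws:elasticloadbalancing:us-east-1:123456789012:targetgroup/orders-tg/fedcba9876543210",
}

rules = [
    path_rule(LISTENER_ARN, 10 * (i + 1), path, tg_arn)
    for i, (path, tg_arn) in enumerate(TARGET_GROUPS.items())
]
# With credentials configured:
# elbv2 = boto3.client("elbv2")
# for rule in rules:
#     elbv2.create_rule(**rule)
```

Spacing priorities by 10 leaves room to insert more specific rules later without renumbering the existing ones.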

Best Practices for Simplified Microservices Deployment

When deploying microservices on AWS ECS, following best practices is crucial to ensure high availability, scalability, and efficient management. Here are some key best practices:

1. Use Task Definitions and Services

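In ECS, a task definition describes how your containers should run, including the Docker image, CPU, memory, and environment variables. A service keeps the desired number of tasks (each task runs one or more containers) running, replaces tasks that stop or fail health checks, and can register them with a load balancer. Service Auto Scaling can then adjust that desired number.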
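To illustrate what keeping the desired number of tasks running means, here is a deliberately simplified Python model of a service's scheduling decision. It is not how ECS is implemented: the real scheduler also honors deployment settings such as minimum healthy percent, placement strategies, and connection draining. The `Task` type and the `reconcile` function are hypothetical names for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    task_id: str
    healthy: bool


def reconcile(desired_count: int, tasks: list[Task]) -> tuple[int, list[str]]:
    """Simplified model of one service scheduling decision.

    Returns (number of tasks to start, ids of tasks to stop): unhealthy tasks
    are stopped and replaced, and healthy tasks beyond desired_count are stopped.
    """
    healthy = [t.task_id for t in tasks if t.healthy]
    unhealthy = [t.task_id for t in tasks if not t.healthy]
    surplus = healthy[desired_count:]  # more healthy tasks than requested
    to_start = max(0, desired_count - len(healthy))
    return to_start, unhealthy + surplus


# Desired 3, one healthy and one failed task: start 2 new tasks, stop "b".
print(reconcile(3, [Task("a", True), Task("b", False)]))
```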

2. Enable Auto Scaling

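To handle fluctuating traffic, scale at two levels: use Service Auto Scaling to adjust the number of tasks in each ECS service, and use an Auto Scaling group (ideally attached to the cluster through a capacity provider with managed scaling) to add or remove EC2 instances. Together, these keep the number of running containers, and the capacity to host them, in line with the load.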
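A minimal sketch of both scaling levels, written as boto3 keyword arguments so it runs offline. The service-level part targets Application Auto Scaling (`register_scalable_target` and `put_scaling_policy` with a target-tracking policy on average CPU); the instance-level part is a capacity provider with managed scaling (`ecs.create_capacity_provider`). The service name, the Auto Scaling group ARN, and the numeric targets are illustrative assumptions, not recommendations.

```python
CLUSTER = "microservices-cluster"
SERVICE = "users-service"                     # hypothetical service name
RESOURCE_ID = f"service/{CLUSTER}/{SERVICE}"  # Application Auto Scaling resource ID for an ECS service

# Service level: application-autoscaling register_scalable_target(**scalable_target)
scalable_target = {
    "ServiceNamespace": "ecs",
    "ResourceId": RESOURCE_ID,
    "ScalableDimension": "ecs:service:DesiredCount",
    "MinCapacity": 2,
    "MaxCapacity": 10,
}

# Service level: application-autoscaling put_scaling_policy(**scaling_policy)
scaling_policy = {
    "PolicyName": f"{SERVICE}-cpu-target-tracking",
    "ServiceNamespace": "ecs",
    "ResourceId": RESOURCE_ID,
    "ScalableDimension": "ecs:service:DesiredCount",
    "PolicyType": "TargetTrackingScaling",
    "TargetTrackingScalingPolicyConfiguration": {
        "TargetValue": 60.0,  # aim for about 60% average CPU across the service's tasks
        "PredefinedMetricSpecification": {
            "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
        },
        "ScaleOutCooldown": 60,   # seconds
        "ScaleInCooldown": 120,   # scale in more cautiously than out
    },
}

# Instance level: ecs.create_capacity_provider(**capacity_provider)
capacity_provider = {
    "name": "microservices-ec2-capacity",  # hypothetical name
    "autoScalingGroupProvider": {
        # Hypothetical ARN of the Auto Scaling group that launches the cluster's instances.
        "autoScalingGroupArn": (
            "arn:aws:autoscaling:us-east-1:123456789012:autoScalingGroup:"
            "uuid:autoScalingGroupName/microservices-asg"
        ),
        "managedScaling": {"status": "ENABLED", "targetCapacity": 100},
        "managedTerminationProtection": "DISABLED",
    },
}
```

The capacity provider still has to be associated with the cluster (for example with `ecs.put_cluster_capacity_providers`), and services typically launch through a capacity provider strategy instead of `launchType` so that managed scaling can account for their tasks.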

3. Use CloudWatch for Monitoring

Amazon CloudWatch helps you monitor the health of your ECS services, load balancer, and EC2 instances. Set up alarms that alert you when a metric crosses a threshold, such as sustained high CPU utilization or an unexpected drop in request count.
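Here is a sketch of the two example alarms as keyword arguments for boto3's `cloudwatch.put_metric_alarm`: high average CPU for one ECS service (namespace `AWS/ECS`) and a drop in ALB request count (namespace `AWS/ApplicationELB`). The SNS topic ARN, the load balancer dimension value, the thresholds, and the function names are hypothetical.

```python
def cpu_alarm(cluster: str, service: str, sns_topic_arn: str, threshold: float = 80.0) -> dict:
    """Alarm when the service's average CPU stays above threshold for 15 minutes."""
    return {
        "AlarmName": f"{service}-high-cpu",
        "Namespace": "AWS/ECS",
        "MetricName": "CPUUtilization",
        "Dimensions": [
            {"Name": "ClusterName", "Value": cluster},
            {"Name": "ServiceName", "Value": service},
        ],
        "Statistic": "Average",
        "Period": 300,           # 5-minute datapoints
        "EvaluationPeriods": 3,  # three consecutive breaching datapoints
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }


def low_request_alarm(load_balancer: str, sns_topic_arn: str, minimum: float) -> dict:
    """Alarm when the ALB receives fewer than `minimum` requests per 5 minutes.

    load_balancer is the final part of the ALB ARN, e.g. "app/<name>/<id>".
    """
    return {
        "AlarmName": "alb-low-request-count",
        "Namespace": "AWS/ApplicationELB",
        "MetricName": "RequestCount",
        "Dimensions": [{"Name": "LoadBalancer", "Value": load_balancer}],
        "Statistic": "Sum",
        "Period": 300,
        "EvaluationPeriods": 3,
        "Threshold": minimum,
        "ComparisonOperator": "LessThanThreshold",
        "TreatMissingData": "breaching",  # no datapoints at all means no traffic arrived
        "AlarmActions": [sns_topic_arn],
    }


TOPIC = "arn:aws:sns:us-east-1:123456789012:ops-alerts"  # hypothetical SNS topic
alarms = [
    cpu_alarm("microservices-cluster", "users-service", TOPIC),
    low_request_alarm("app/microservices-alb/0123456789abcdef", TOPIC, minimum=10.0),
]
# cloudwatch = boto3.client("cloudwatch")
# for alarm in alarms:
#     cloudwatch.put_metric_alarm(**alarm)
```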

4. Secure Your Microservices

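Use AWS IAM roles to manage access to resources securely: give each task a task role with only the permissions its service needs, and use a task execution role for pulling images and writing logs. Additionally, configure the ALB with an HTTPS listener so that traffic between clients and the load balancer is encrypted.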
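A minimal sketch of both points in Python: the IAM trust policy that allows ECS tasks to assume a role (passed as `AssumeRolePolicyDocument` to `iam.create_role`), and keyword arguments for two `elbv2.create_listener` calls, one serving HTTPS with an ACM certificate and one redirecting plain HTTP to HTTPS. The ARNs are placeholders, and the TLS policy shown is one of the predefined ELB security policies; choose whichever matches your requirements.

```python
import json

# Trust policy that lets ECS tasks assume the role (task role or task execution role).
TASK_ROLE_TRUST_POLICY = json.dumps({
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"Service": "ecs-tasks.amazonaws.com"},
        "Action": "sts:AssumeRole",
    }],
})


def https_listeners(alb_arn: str, certificate_arn: str) -> list[dict]:
    """Keyword arguments for two elbv2.create_listener calls."""
    https = {
        "LoadBalancerArn": alb_arn,
        "Protocol": "HTTPS",
        "Port": 443,
        "Certificates": [{"CertificateArn": certificate_arn}],
        "SslPolicy": "ELBSecurityPolicy-TLS13-1-2-2021-06",
        # Requests that match no path rule get a plain 404.
        "DefaultActions": [{
            "Type": "fixed-response",
            "FixedResponseConfig": {
                "StatusCode": "404",
                "ContentType": "text/plain",
                "MessageBody": "Not found",
            },
        }],
    }
    http_redirect = {
        "LoadBalancerArn": alb_arn,
        "Protocol": "HTTP",
        "Port": 80,
        # Permanently redirect every plain-HTTP request to the HTTPS listener.
        "DefaultActions": [{
            "Type": "redirect",
            "RedirectConfig": {"Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"},
        }],
    }
    return [https, http_redirect]


listeners = https_listeners(
    "arn:aws:elasticloadbalancing:us-east-1:123456789012:loadbalancer/app/microservices-alb/0123456789abcdef",
    "arn:aws:acm:us-east-1:123456789012:certificate/hypothetical-id",
)
```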

Step-by-Step Guide to Deploying Microservices on ECS with EC2 and a Load Balancer

Now that we’ve covered the basics, let’s walk through the steps for deploying microservices on ECS with EC2 instances and a single load balancer.

Step 1: Create an ECS Cluster

- Go to the ECS Console: In the AWS Management Console, navigate to ECS.

- Create Cluster: Click “Create Cluster” and select the EC2 launch type.

- Configure Cluster: Enter a name for your cluster (e.g., “microservices-cluster”) and choose an instance type for the EC2 instances (e.g., t3.medium).
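For reference, the cluster and its EC2 capacity can also be described in code. In this sketch, the cluster request is for boto3's `ecs.create_cluster`, and the launch template data (for `ec2.create_launch_template`) includes user data that tells the ECS agent on the ECS-optimized AMI which cluster to join. The AMI ID and the instance profile name `ecsInstanceRole` are assumptions; the profile's role needs the `AmazonEC2ContainerServiceforEC2Role` managed policy or equivalent permissions.

```python
import base64

CLUSTER_NAME = "microservices-cluster"

# ecs.create_cluster(**cluster_request)
cluster_request = {"clusterName": CLUSTER_NAME}

# The ECS agent reads /etc/ecs/ecs.config at startup; without ECS_CLUSTER it
# registers the instance with the cluster named "default".
USER_DATA = f"""#!/bin/bash
echo ECS_CLUSTER={CLUSTER_NAME} >> /etc/ecs/ecs.config
"""

# ec2.create_launch_template(LaunchTemplateName=..., LaunchTemplateData=launch_template_data)
launch_template_data = {
    "InstanceType": "t3.medium",
    "ImageId": "ami-0123456789abcdef0",  # hypothetical: use a current ECS-optimized AMI ID
    "IamInstanceProfile": {"Name": "ecsInstanceRole"},  # assumed instance profile name
    # Launch templates expect user data to be base64-encoded.
    "UserData": base64.b64encode(USER_DATA.encode()).decode(),
}
```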

Step 2: Define a Task Definition

- Create a Task Definition: In the ECS console, create a new task definition for each microservice. Specify the Docker image, resource requirements (CPU and memory), and environment variables for each microservice.

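- Container Definitions: Map every port your container listens on, such as 8080 for HTTP traffic. With the bridge network mode, setting the host port to 0 enables dynamic port mapping, so several copies of a task can run on the same instance behind the ALB.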
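As a sketch of Step 2, the function below builds keyword arguments for boto3's `ecs.register_task_definition`, one task definition per microservice, using bridge networking with dynamic host ports. The image URIs, environment variables, and CPU/memory sizes are hypothetical examples.

```python
def task_definition(name: str, image: str, env: dict[str, str]) -> dict:
    """Keyword arguments for boto3's ecs.register_task_definition."""
    return {
        "family": name,
        "networkMode": "bridge",
        "requiresCompatibilities": ["EC2"],
        "containerDefinitions": [
            {
                "name": name,   # referenced later by the service's loadBalancers entry
                "image": image,
                "cpu": 256,     # CPU units; 1024 units = 1 vCPU
                "memory": 512,  # hard memory limit in MiB
                "essential": True,
                # hostPort 0 asks ECS for a dynamic host port, so several copies
                # of this task can share one instance behind the ALB.
                "portMappings": [{"containerPort": 8080, "hostPort": 0, "protocol": "tcp"}],
                "environment": [{"name": k, "value": v} for k, v in env.items()],
            }
        ],
    }


# Hypothetical ECR registry and settings.
REGISTRY = "123456789012.dkr.ecr.us-east-1.amazonaws.com"
definitions = [
    task_definition("users", f"{REGISTRY}/users:1.0", {"LOG_LEVEL": "info"}),
    task_definition("orders", f"{REGISTRY}/orders:1.0", {"LOG_LEVEL": "info"}),
]
# ecs = boto3.client("ecs")
# for definition in definitions:
#     ecs.register_task_definition(**definition)
```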

Step 3: Set Up the Load Balancer

- Create an Application Load Balancer (ALB): In the AWS console, create a new ALB.

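- Define Listeners and Target Groups: Configure an HTTP listener, create a target group for each microservice (each target group should correspond to one ECS service), and add listener rules that forward each path, such as /api/users and /api/users/*, to its target group.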

- Configure Health Checks: Set up health checks for each target group to ensure the load balancer only routes traffic to healthy containers.
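A sketch of one target group per microservice, as keyword arguments for boto3's `elbv2.create_target_group`. It assumes each service exposes a `/health` endpoint (hypothetical) and that tasks use bridge networking, so targets are registered as instance and port pairs; the VPC ID is a placeholder. Because the health check port is left at its default (the traffic port), each dynamically mapped container port is checked directly.

```python
def target_group(name: str, vpc_id: str, health_path: str = "/health") -> dict:
    """Keyword arguments for boto3's elbv2.create_target_group."""
    if len(name) > 32:
        raise ValueError("target group names are limited to 32 characters")
    return {
        "Name": name,
        "Protocol": "HTTP",
        "Port": 8080,                    # default port; ECS registers each task's actual host port
        "VpcId": vpc_id,
        "TargetType": "instance",        # bridge-mode tasks are registered as instance:port
        "HealthCheckProtocol": "HTTP",
        "HealthCheckPath": health_path,  # hypothetical endpoint each service must implement
        "HealthCheckIntervalSeconds": 15,
        "HealthCheckTimeoutSeconds": 5,  # must be shorter than the interval
        "HealthyThresholdCount": 2,
        "UnhealthyThresholdCount": 3,
        "Matcher": {"HttpCode": "200"},
    }


target_groups = [target_group(f"{svc}-tg", "vpc-0123456789abcdef0") for svc in ("users", "orders")]
# elbv2 = boto3.client("elbv2")
# for tg in target_groups:
#     elbv2.create_target_group(**tg)
```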

Step 4: Launch the ECS Services

- Create Services: For each microservice, create an ECS service. Link the service to the previously defined task definition and target group.

- Set Desired Count: Set the desired number of tasks (containers) to run for each service.

- Attach Load Balancer: Ensure the ECS service is connected to the ALB so traffic can be routed to the containers.
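This sketch builds keyword arguments for boto3's `ecs.create_service` that attach a service to its target group, using hypothetical names (`users`, `users-service`). The `containerName` and `containerPort` must match the task definition, and the target group ARN is a placeholder. The grace period and deployment percentages are example values, not tuned recommendations.

```python
def ecs_service(name: str, target_group_arn: str, desired_count: int = 2) -> dict:
    """Keyword arguments for boto3's ecs.create_service behind the shared ALB."""
    return {
        "cluster": "microservices-cluster",
        "serviceName": f"{name}-service",
        "taskDefinition": name,  # family only: ECS uses the latest ACTIVE revision
        "desiredCount": desired_count,
        "launchType": "EC2",
        "loadBalancers": [{
            "targetGroupArn": target_group_arn,
            "containerName": name,  # must match the container name in the task definition
            "containerPort": 8080,  # the container port, not the dynamic host port
        }],
        # Ignore ALB health check failures while new containers start up.
        "healthCheckGracePeriodSeconds": 60,
        # During deployments, keep at least half the tasks and allow up to double.
        "deploymentConfiguration": {"minimumHealthyPercent": 50, "maximumPercent": 200},
    }


users_service = ecs_service(
    "users",
    "arn:aws:elasticloadbalancing:us-east-1:123456789012:targetgroup/users-tg/0123456789abcdef",
)
# boto3.client("ecs").create_service(**users_service)
```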

Step 5: Test the Deployment

After deployment, test the microservices by sending requests to the ALB’s DNS name. Each service should respond on its assigned path (for example, /api/users or /api/orders).
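A small smoke test can automate this check. The Python sketch below uses only the standard library, requests each path through the ALB, and reports whether it returned a 2xx status. The DNS name in the usage comment is a placeholder, and the `fetch` parameter exists so the check can be exercised without network access. Connection errors (for example, a wrong DNS name) are not caught and will raise.

```python
import urllib.error
import urllib.request
from typing import Callable


def fetch_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code of a GET request (4xx/5xx included)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # urlopen raises for 4xx/5xx; report the code instead


def smoke_test(alb_dns: str, paths: list[str],
               fetch: Callable[[str], int] = fetch_status) -> dict[str, bool]:
    """Map each path to True if the ALB answered it with a 2xx status."""
    results = {}
    for path in paths:
        status = fetch(f"http://{alb_dns}{path}")
        results[path] = 200 <= status < 300
    return results


# Example (replace with your ALB's DNS name):
# print(smoke_test("my-alb-123456.us-east-1.elb.amazonaws.com", ["/api/users", "/api/orders"]))
```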

Frequently Asked Questions (FAQs)

1. What is AWS ECS and how does it help with microservices deployment?

AWS ECS (Elastic Container Service) is a fully managed container orchestration service by Amazon. It simplifies the deployment and management of microservices by running Docker containers on EC2 or Fargate, allowing easy scaling and monitoring.

2. Why use EC2 with ECS instead of AWS Fargate?

EC2 gives you more control over your infrastructure and can be more cost-effective for predictable workloads. Fargate is serverless and great for dynamic workloads, but EC2 is ideal if you want custom instance types or need to manage the environment closely.

3. Can I deploy multiple microservices with one load balancer in AWS ECS?

Yes. With an Application Load Balancer (ALB), you can route traffic to multiple ECS services using path-based routing (e.g., /api/users, /api/orders). This reduces complexity and cost.

Conclusion: Simplify Your Microservices Deployment with AWS ECS

Deploying microservices on AWS ECS with EC2 instances and a single load balancer offers a simplified, scalable solution for managing containerized applications. By following best practices and using ECS features like task definitions, auto-scaling, and monitoring with CloudWatch, you can achieve a highly available and efficient architecture.

This approach lets you handle growing traffic without running a separate load balancer for every service. With a single load balancer, you gain centralized control, lower costs, and one place to define routing rules. Start using ECS today and streamline your microservices deployment!
